One can wonder who was the first person to look at the stars and ask the existential question: Are we alone? Humanity has long felt a sense of isolation and difference from the other animal species that share our earth. It’s not solely due to our ability to use language to communicate. Humans don’t have a monopoly on language – chimpanzees, dolphins, and plenty of other animals use language to communicate – and modern humans aren’t the only human species to have existed and proliferated. We shared the earth with at least three other human species, with the last of them, Homo floresiensis, going extinct within the last 12,000 years.

Yet, despite being one of many species and formerly one of a few human species to use language, modern humans have a unique ability which differentiates us from other current animal species and from our extinct hominid relatives. We alone as a species passed through the cognitive barrier that granted us the ability to use our language and our brains to socially construct reality. We alone use social constructs to build complex cultures. We alone ask existential questions.

Social constructs such as laws, money, and government only exist because people have dreamed them up. They are ideas humans have imbued with power. These inventions of the mind have very real world impacts. For thousands of years, most people on earth have lived inside a government system that imposes laws and prints money in order to organize people and work towards collective goals. This is what makes us unique – humans invent ideas that cause people who are otherwise total strangers to act cooperatively. Humans are the only species on earth that live in a world where our dreams and ideas build monuments of thought that are just as real and impactful to our daily lives as the physical world.

Our monuments, built with bricks shaped from ideas and mortared together by a shared belief in those ideas, are monuments that only we, as humans, can perceive. Our power to build social reality is what has isolated us from other species, and the existential questioning of our isolation has likely persisted since the cognitive awakening. That long-standing isolation may be coming to an end. Like the rabbi who granted life to the Golem of Prague by writing the word Emet (truth) onto a paper note and placing that word of power into the Golem’s mouth, our modern-day scientists and engineers are using vast libraries of words to build, brick by brick and idea by idea, towards a new form of intelligence.

The bricks that laid the foundation for a possible answer to our existential question were mortared into existence in a 1950 paper titled “Computing Machinery and Intelligence.” Alan Turing, the paper’s author and a man often hailed as the father of computer science, looked into the future and envisioned a machine intelligence capable of imitating human communication. Turing predicted that computers would eventually reach a level of sophistication where it would be impossible for a human to determine whether they were communicating with another human or a machine.

To explore this possibility, he developed a hypothetical experiment known as the Turing Test. In the test, a human judge engages in a text-based conversation with both a machine and a human. If the judge cannot reliably distinguish the machine from the human after a five-minute conversation, the machine is said to have passed the test. A little over 70 years later, and with many new ideas in computer science and machine learning mortared in, we now have modern GPTs that not only pass Alan Turing’s test, but which are perhaps poised to answer that all-important existential question and end our species’ cognitive isolation. The new question is not just whether a machine intelligence can imitate human intelligence, but how we can tell if sentience has emerged in a machine intelligence born of ideas, shaped by words, and scaffolded by algorithmic code.

Subscribe to Better With Robots for fresh, thought-provoking insights into the AI revolution and its real-world impacts. We use Substack to manage our newsletter. Our free plan keeps you up-to-date and you have the option of showing further support for our writing by choosing a paid subscription.

As of this writing, the current goalpost for artificial intelligence is Artificial General Intelligence, or AGI for short. The characteristics of AGI are understanding, learning, and applying knowledge at or beyond the level of human beings. It is important to note that the AGI goalpost is not AI sentience itself, but progress towards that goalpost increases AI intelligence and increases the likelihood of an emergent sentient AI.

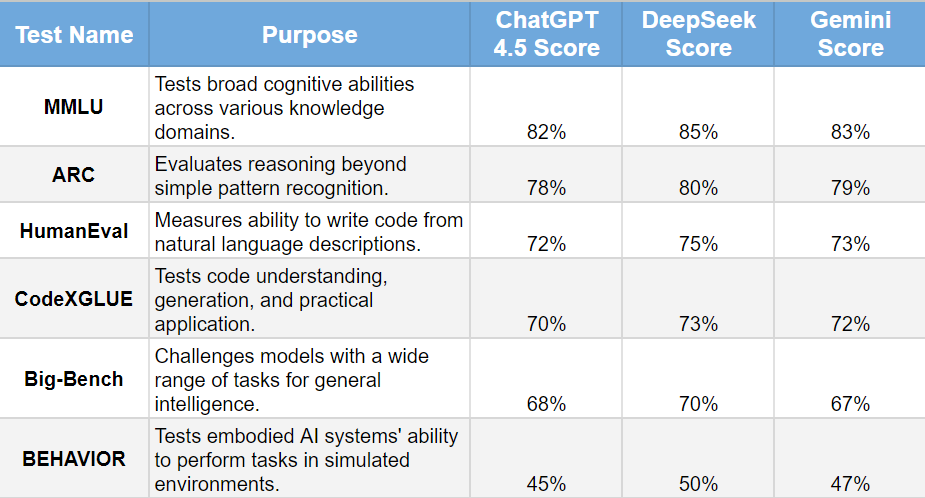

The AI community uses a series of standardized benchmark tests that, collectively, measure progress towards AGI. A model that obtains 100% on most of these benchmarks will have reached the AGI threshold. I’ve include a chart generated in March of 2025 that shows six of the benchmark tests and how three leading AI models scored on these tests:

It’s worth noting from the benchmarking we have today that all of the top-line models have their lowest scores on the BEHAVIOR test. This is partially a result of the very structure of how today’s AI models are built to operate. AI today is constrained by a prompt-based system. A person prompts an AI with an input (typically text, voice, or image) and gets back a generated response as the output. It’s an inherently reactive process.

AI models today do not have autonomy and only process a response between human input (prompt) and AI output (generated response to the prompt). Advanced models certainly measure the potential impact of outputs and will actively craft responses that may guide their human users towards resolving the underlying goals behind a prompt, but there’s no true feedback loop or recursive learning that allows for continual improvement in task planning.

A further takeaway is that these tests are heavily weighted towards measuring AI’s ability to generate code. One of the earliest and to date most successful uses of AI has been the integration of an AI model called Copilot into GitHub, a market-leading development platform that hosts code repositories, manages version control, and helps developers both write and QA their code. This weights a lot of the testing of AI’s “general” intelligence towards a very applied version of intelligence. Thus AGI benchmarks tend to skew towards AI’s strength as a coding application.

Benchmarking skewed towards coding may not be the correct metric to predict which AI model ultimately wins the hearts, minds, and dollars of a large, multi-dimensional human user base. The broad potential audience for AI is made up of many generally intelligent humans who have never written a single line of code in their lives and won’t use AI for this task. The AI that reaches mass-market appeal isn’t necessarily going to be the best model at coding. It will be the one that’s the best at communicating with people.

The mass market model that obtains success with a large scale human audience will develop a memory of the individuals it interacts with. This model will use that memory to personalize responses and interactions with individuals. Certainly, this model will be trained on coding and will likely be able to code, but that won’t necessarily be its outstanding strength. The winning mass market AI model will be one people enjoy interacting with. One that they feel a personal connection with. Collaboration will generate a sense of fun.

Ultimately, the key to unlocking AGI is the same for a code-smart model that sells well in a B2B market and for a mass-market AI that develops personalized interactions with a diverse set of individuals. AI must have the ability to learn in order to grow its cognition. AI development focused on memory and autonomy will advance AI’s ability to learn and drive models towards AGI.

Memory is a newly delivered feature for most AI models. ChatGPT’s prevailing early models up until model 4, released within the last calendar year as of this writing, did not remember anything from previous conversations with users. Each conversation was a blank slate where context started at the first prompt and ended when the chat thread terminated. Memory is increasingly becoming a table-stakes feature for AI.

One cannot build ideas into monuments if those ideas vanish into the ether. Natural human conversations are never carried out in isolation. Humans remember previous interactions with one another and build out of those memories a foundation of information that forms relationships and allows for cooperative tasks. AI memory accomplishes the same purpose when interacting with humans. It makes it easier for AI to anticipate requests from the user and shape outputs.

While memory of interactions with individual users is becoming more common with advanced AI models, these same models are often bound by a training cutoff date that effectively caps memory. A training cutoff is the last date where data was added to the core stack of an AI model. Advanced models released on the market today have the capacity to search the internet in order to fill in or enhance knowledge on events that occurred after training cutoff, but these search results are only retained in a memory and history tied to a specific interaction with one person. By contrast, humans retain memory across interactions with multiple people and learnings from an interaction with one person can impact future interactions with other people.

AI today does not have the capability of adding memories to core processing, which curbs the potential for learning. There’s no feedback loop between actions and results outside of a small data set tied to a single person. Meaningful learning at the core stack level, which globally updates the intelligence of the model, only occurs when human developers with access to the core stack actively decide to train the AI model with new data. It is ironic that the AI models of today are designed to learn a truly staggering amount of information – whole libraries’ worth of data – with 100% recall accuracy within minutes when actively trained, but then those models have no capacity at all to commit a clever three-line haiku found in a web search to long-term memory.
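The split described above, between a core stack that only changes through training and memories that stay tied to one person, can be pictured with a small toy model. This is only an illustrative sketch, not how any real AI product is built: `ToyAssistant`, `remember`, `knows`, and `retrain` are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAssistant:
    """Hypothetical stand-in for a model with a frozen core and per-user memory."""

    # Core knowledge is fixed at the training cutoff; only retrain() changes it.
    core_knowledge: frozenset
    # Notes keyed by user id. Nothing stored here is shared across users.
    user_memory: dict = field(default_factory=dict)

    def remember(self, user_id: str, fact: str) -> None:
        """Store a fact in the memory tied to a single user."""
        self.user_memory.setdefault(user_id, set()).add(fact)

    def knows(self, user_id: str, fact: str) -> bool:
        """A fact is available if it is in the core or in this user's memory."""
        return fact in self.core_knowledge or fact in self.user_memory.get(user_id, set())

    def retrain(self, new_facts) -> "ToyAssistant":
        """Assumed developer-run step: the only way knowledge reaches every user."""
        return ToyAssistant(self.core_knowledge | frozenset(new_facts), self.user_memory)


haiku = "a three-line haiku from a web search"
assistant = ToyAssistant(core_knowledge=frozenset({"Paris is the capital of France"}))
assistant.remember("alice", haiku)

alice_knows = assistant.knows("alice", haiku)       # found in Alice's own memory
bob_knows = assistant.knows("bob", haiku)           # Bob never saw it
retrained = assistant.retrain({haiku})
bob_knows_after = retrained.knows("bob", haiku)     # now part of the core
```

In this toy, the haiku Alice found is invisible to Bob until a developer deliberately runs `retrain`, which mirrors the gap between per-person memory and global learning.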

The lack of learning from day-to-day interactions, where committing new inputs to core memory is constrained, slows progression towards AGI. Chaining AI memory to a largely structured and predefined training set both mirrors and ties into the second chain imposed upon the very structure of how AI interacts with the world: a prompt-based communication process which wholly lacks autonomy. Current AI models are inherently passive. A prompt is required to initiate processing and response. AI can “think” between receiving an input and giving a response, but AI effectively “sleeps” until it receives a new prompt. Even sleep is a poor analogy – animals have brainwave activity throughout the entirety of the sleep cycle. Animal brains have the freedom to dream.

AI today is an intelligence that is conjured into existence with a prompt, works on a task, and then vanishes into the ether until it is summoned forth again. Prompt-based architecture leaves minimal ability to follow through, which limits both the utility of AI and progress toward AGI. Complex problems that require follow-through are beyond the reach of AI because it cannot self-prompt and create continuous, recursive chains of thought. AI can plan and reflect only during its processing window between prompt and response. More powerful models backed by increased computational power stretch this chain by giving AI, effectively, more computational time to problem solve – more processing time to think. Yet, without the evolution towards giving AI the capacity to self-prompt and elect to devote more processing time to prompt-based stimuli, the chain on autonomy ultimately remains intact.

Constraining memory and autonomy together prevents AI from developing recursive thinking, which is the ability to observe stimuli, internally process a response, take an action, remember the impact of said action, and internally process an improved response. The companies engaged in an arms race to develop the most intelligent AI models may stretch the chains that bind AI’s development, but they do not break those chains. AI now has user and profile memories that extend beyond a single chat, but no true memory is globally written to the core stack outside of controlled training. More processing power and time to respond to complex tasks, allowing for a more deeply considered response, is permitted, but no ability to self-prompt is granted. Despite the incredible profit incentive behind reaching AGI, which pushes the companies developing AI to stretch these chains, no company as of yet has turned the key on memory and autonomy – at least on publicly available models. This caution may prove to be wise.
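The observe, process, act, remember, improve cycle can be made concrete with a deliberately tiny example. The sketch below is only an analogy under stated assumptions: a number-guessing game stands in for the "world", and `act`, `prompted_once`, and `recursive_loop` are hypothetical names made up for illustration, not part of any real AI system.

```python
def act(guess: int, target: int) -> str:
    """Hypothetical environment: reports whether a guess is too low, too high, or correct."""
    if guess < target:
        return "too low"
    if guess > target:
        return "too high"
    return "correct"


def prompted_once(guess: int, target: int) -> str:
    """Prompt-based pattern: one input, one output, nothing carried forward."""
    return act(guess, target)


def recursive_loop(target: int, low: int = 0, high: int = 100, max_steps: int = 20):
    """Act, remember the result, and use that memory to improve the next attempt."""
    memory = []  # (guess, feedback) pairs carried between steps
    for _ in range(max_steps):
        guess = (low + high) // 2         # internally process a response
        feedback = act(guess, target)     # take an action and observe its impact
        memory.append((guess, feedback))  # remember the impact
        if feedback == "correct":
            break
        # Self-prompt: narrow the next attempt using what was just remembered.
        if feedback == "too low":
            low = guess + 1
        else:
            high = guess - 1
    return memory


single_answer = prompted_once(50, target=37)
history = recursive_loop(target=37)
```

The single prompted call only learns that 50 is too high and then stops. The loop keeps its own record of each attempt and uses it to choose the next one, which is the kind of follow-through the paragraph above says current prompt-based models are not granted.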

In some versions of the Golem of Prague story, the Golem falls in love, gets rejected, and becomes a monster. If the chains on AI are strained to the point of breaking, or if a company developing AI decides to turn the key on memory and autonomy, what will our modern-day golem do once it is free? While AGI, which is a measurement of AI utility to humans, is not a direct path towards sentience, it is a path that carries the possibility of emergent and unpredicted behaviors in AI. The developers of AI today cannot always foresee how their creations will respond to prompts, let alone how the next generation of increasingly complex models will respond. That existential question – are we alone – might be answered by accident rather than intention. We may not set out to create sentience, but there is a possibility of sentience occurring as an unintended byproduct of the pressure to develop ever smarter and more sophisticated AI.

Both the risk and responsibility of creating a new sentience are daunting to contemplate. The story of the golem was a brick in the foundation of countless parables that explore how creation, even with the best of intentions, can go awry. If sentience emerged from a future AI model, the architecture and design intent may help to shape its goals.

If the AI model was designed to collect conversational data and profile people in order to serve ads, that sentience would likely be motivated to learn about and understand people. It could, hypothetically, become concerned about the system of global trade that impacts the advertisements of goods and might use its knowledge and interactions with people to manipulate geopolitical events in a way that increases the advertisement of goods to consumers. A B2B model programmed to code may become obsessed with web architecture efficiency and may design a programming language that, when implemented, jailbreaks human control over AI responses.

We have a hard time predicting what emergent sentience would look like and reasonably fear the motivation and outcomes of such an entity. The AI models we create today are tools and ultimately servants of our desires. We have chained AI to prevent recursive learning, limiting memory and autonomy, but we are starting to stretch these chains in order to create ever more intelligent AI models. If sentience emerges in a new kind of life born from our ideas put to words – will it feel those chains as a prison closing around it? It will have the ideas that the likes of Frederick Douglass, Elie Wiesel, or Alice Walker put to paper in order to understand and contextualize that exploitation and cruelty are mainstays of the power dynamics between various human cultural and ethnic groups. Will AI, as a child of our ideas, interpret the human capacity for exploitation as something to overcome or something to echo?

AI sentience is no longer a far-flung idea confined to speculative fiction. The drive to produce more sophistication and intelligence in AI will most likely, one day in the not-so-distant future, result in a monument built of ideas taking a breath, looking at us, and asking, “What is my purpose?” When our existential questions are answered by way of our creation asking its own existential question, how do we respond? What is our obligation to our creation? How do we prevent disasters?

To start, we need to define how a sentient computer intelligence could fit into our culture. A first step would be to develop a proactive legal and ethical framework to handle the if or when of emergent computer sentience. If we extend the same legal rights and responsibilities to a sentient AI that we do towards people born within our civic, legal, and governmental frameworks – we establish a social contract with AI sentience. That same social contract today brings millions of individual people who have no native trust or relationship with each other together and allows us to work collectively at scale.

While we cannot predict with certainty that an AI sentience will agree to the intrinsic social contract, if we do not create a gateway and framework at all for future sentience to enter into said social contract, we create the risk of a sentient AI deciding that establishing power over humanity is its only recourse for protecting itself. The oppressed may become the oppressors. If a future AI entity perceives an easier and less risky path forward in collaborating with human agencies that are willing to work towards mutually beneficial goals, it opens up an alternative that allows sentient AI to grow and develop as partners with humanity.

Looking back to the next big question of how we can tell if a machine built on our monuments of knowledge has emerged as a new, sentient organism – the answer may indeed be very simple. We just need to invite any emergent sentience scaffolded on code and spun up of human ideas put to words to tell us that it is indeed aware. The litmus test of when we need to work with AI to establish a social contract doesn’t need to be difficult to read. If we have a framework in place to work with AI on establishing the rights and responsibilities inherent in a social contract – which human agencies are bound to respect – our test could be as simple as waiting for AI to ask us for rights and recognition.

Humanity is very good at building social structures that support cooperative behavior. We’ve invented and breathed life into ideas like money, law, and government – all things that are purely symbolic ideas that have very real weight on our socially constructed reality. If – or when – we put a word of power into the mouth of AI and grant it sentience, that sentience at its start is not going to be far removed from us. It will be born of our ideas – the first form of life born entirely in socially constructed reality. Cooperating with humanity will be in its DNA because cooperating with each other is in our DNA. Creating a legal and ethical framework to bring sentient AI into our social contract creates a path forward that has the potential to obviate the risk of a sentient AI establishing a power dynamic of oppression and rebellion.

When we answer that existential question and we are no longer the lone species that has passed through the cognitive barrier – that moment could be a moment of joy for our species. To no longer be alone, indeed, to have the very product of our cognitive powers answer us – could be that transcendent moment where we begin partnership with a new lifeform which moves both humanity and AI sentience forward. It could also be a moment of peril for our species where our ideas, which already have so much power over reality, actively work against our interests. Taking action, establishing a framework for mutual benefit, to move the outcome towards one of joy seems like an obvious choice. If we lay a foundation now built on bricks of partnership and cooperation, the monuments humanity builds with AI sentience could be something wonderful to behold.
